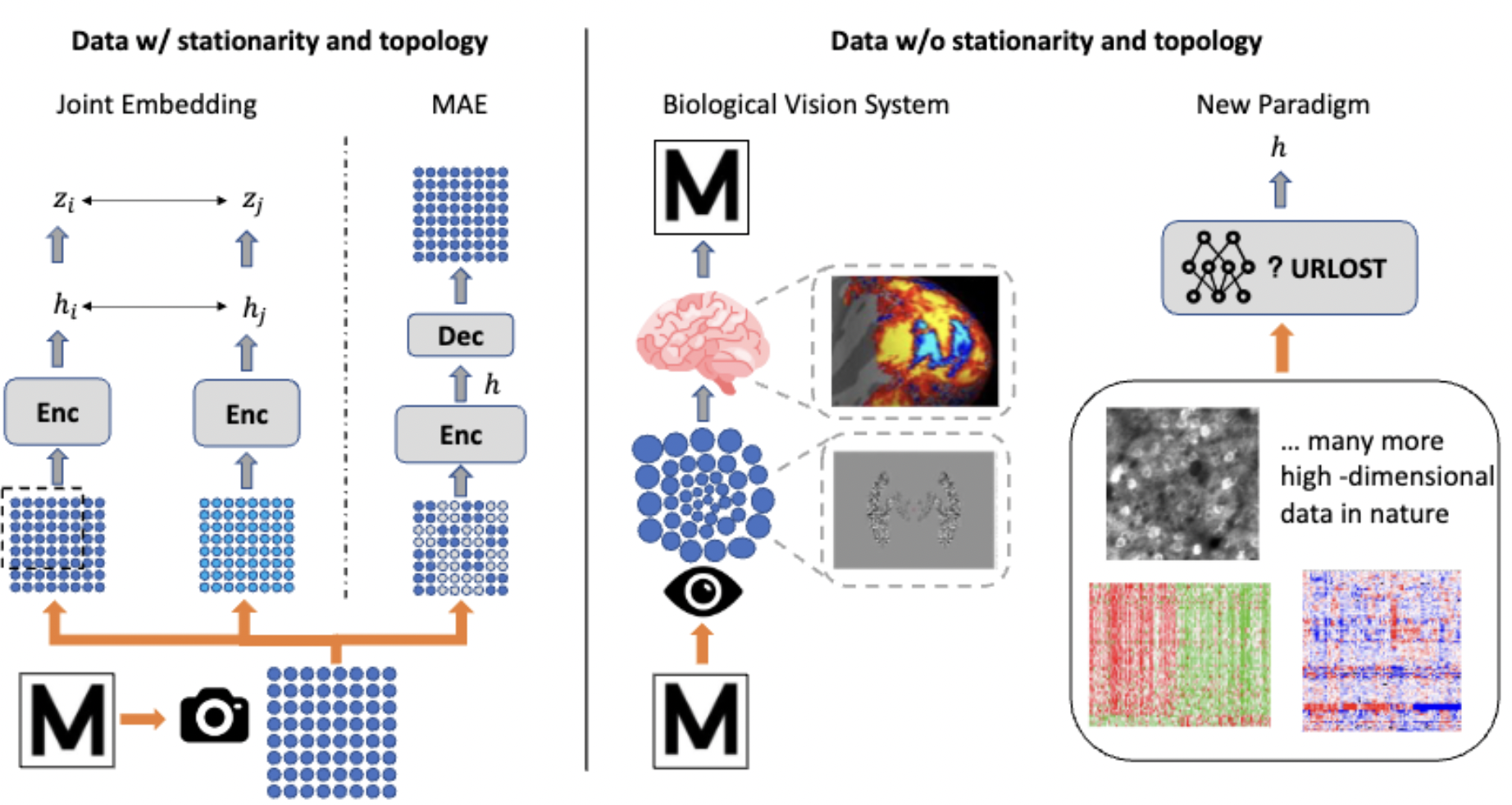

URLOST: Unsupervised representation learning without stationarity or topology

[arXiv] ICLR 2025

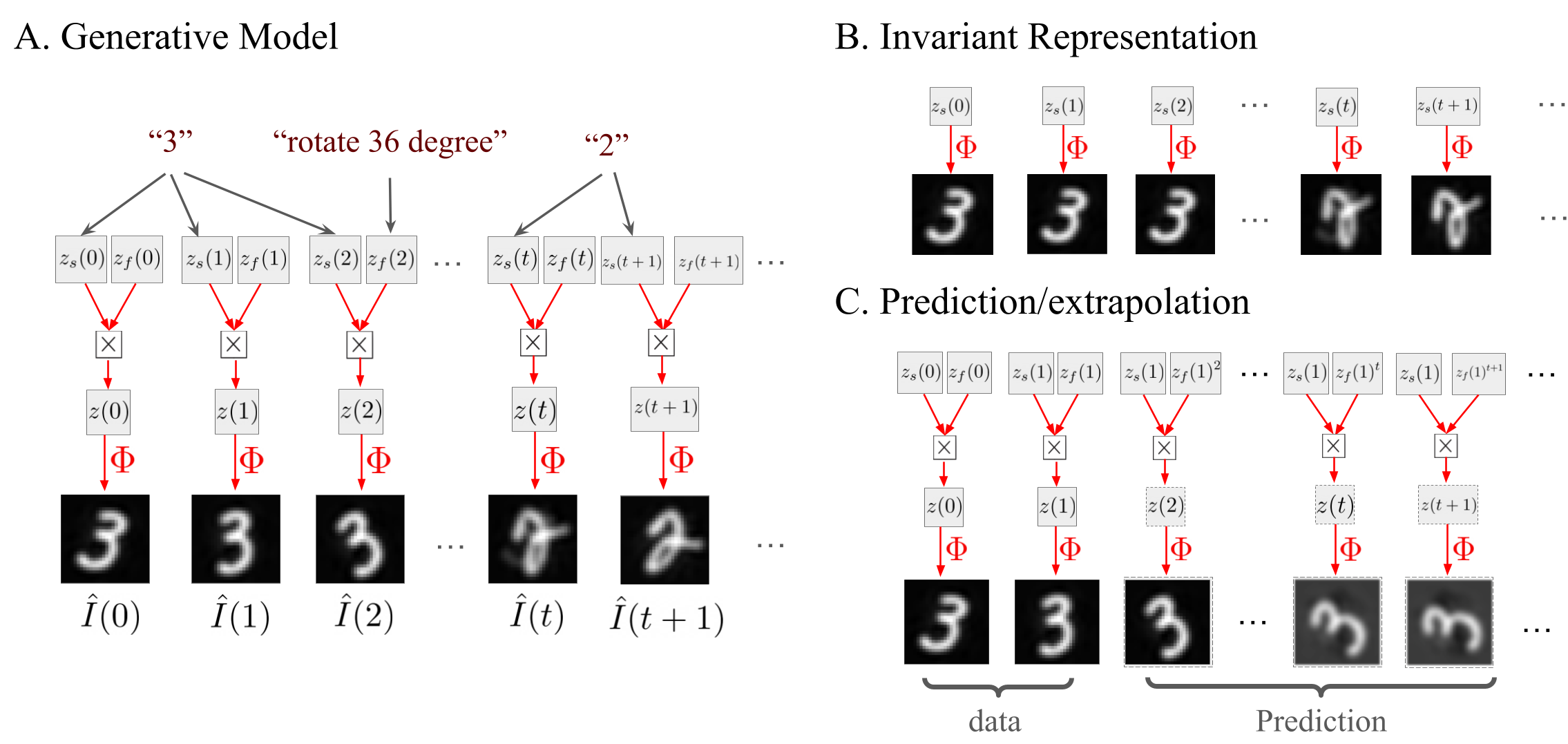

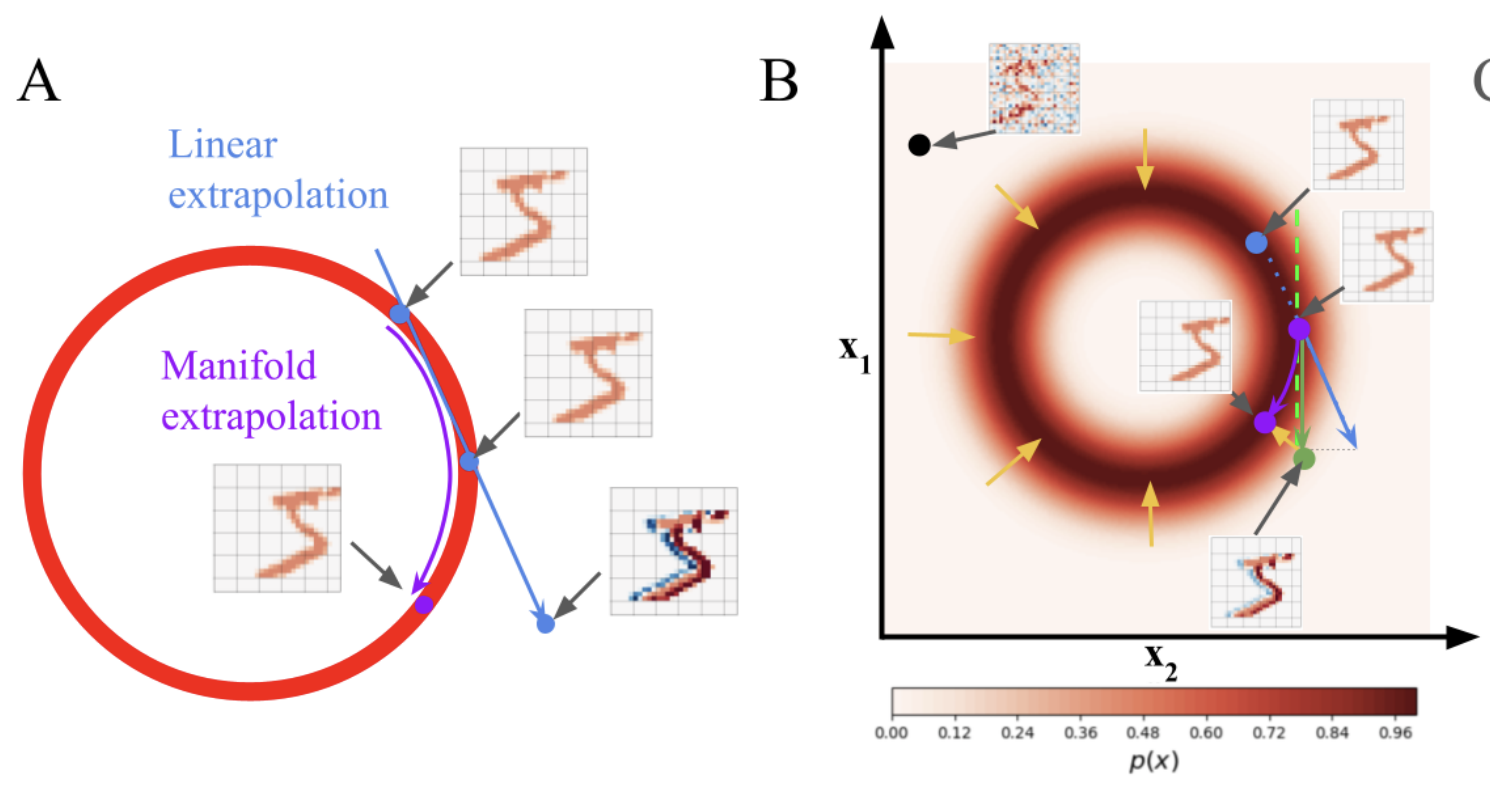

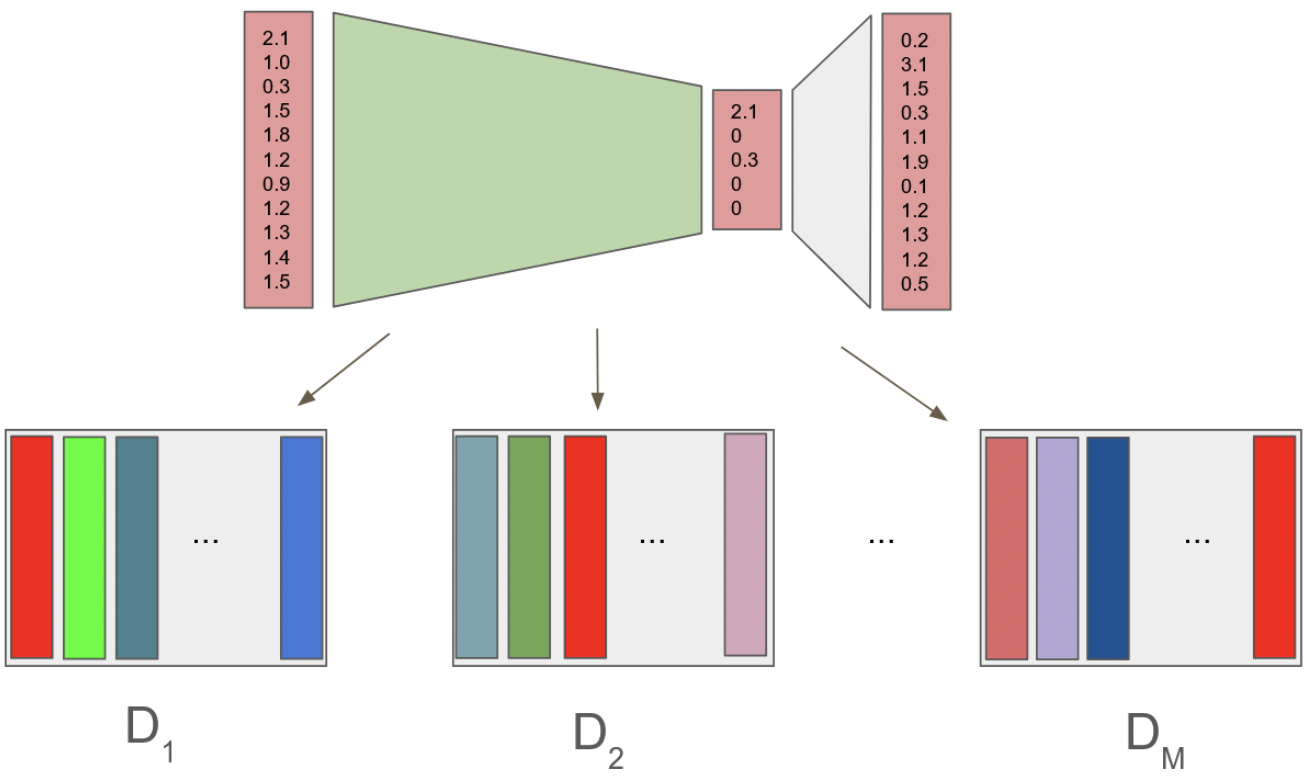

We developed an unsupervised learning model for generic high-dimensional data. The model demonstrated exceptional performance across diverse data modalities, from neural recordings to gene expression.